Adobe announced upgrades to its Firefly video model on Thursday and introduced new third-party artificial intelligence (AI) models that will be available on its platform. The California-based software giant said it is improving the motion generation of its Firefly video model to make it smoother and more natural. The company is also adding advanced video controls that let users generate more consistent video outputs. Additionally, Adobe introduced four new third-party AI models that are being added to Firefly Boards.

In a blog post, the software giant detailed the new features and tools Adobe Firefly users will soon receive. These features will be available only to paid subscribers, and some of them are exclusive to the web app for now.

Adobe’s Firefly video model already produces videos with realistic, physics-based motion. Now, the company is enhancing its motion generation capabilities to deliver smoother, more natural transitions. These improvements apply to both 2D and 3D content and raise motion fidelity not just for characters but also for elements such as floating bubbles, rustling leaves, and drifting clouds.

The recently released Firefly app is also getting support for new third-party AI models. Adobe is introducing Topaz Labs’ Image and Video Upscalers and Moonvalley’s Marey, which will be added to Firefly Boards soon. Meanwhile, Luma AI’s Ray 2 and Pika 2.2, which are already available in Boards, will soon support video generation (currently, they can only be used to generate images).

As for the new video controls, Adobe has added extra tools to make prompting less exasperating and to reduce the need for inline edits. The first tool allows users to upload a video as a reference, and Firefly will follow its original composition in the generated output.

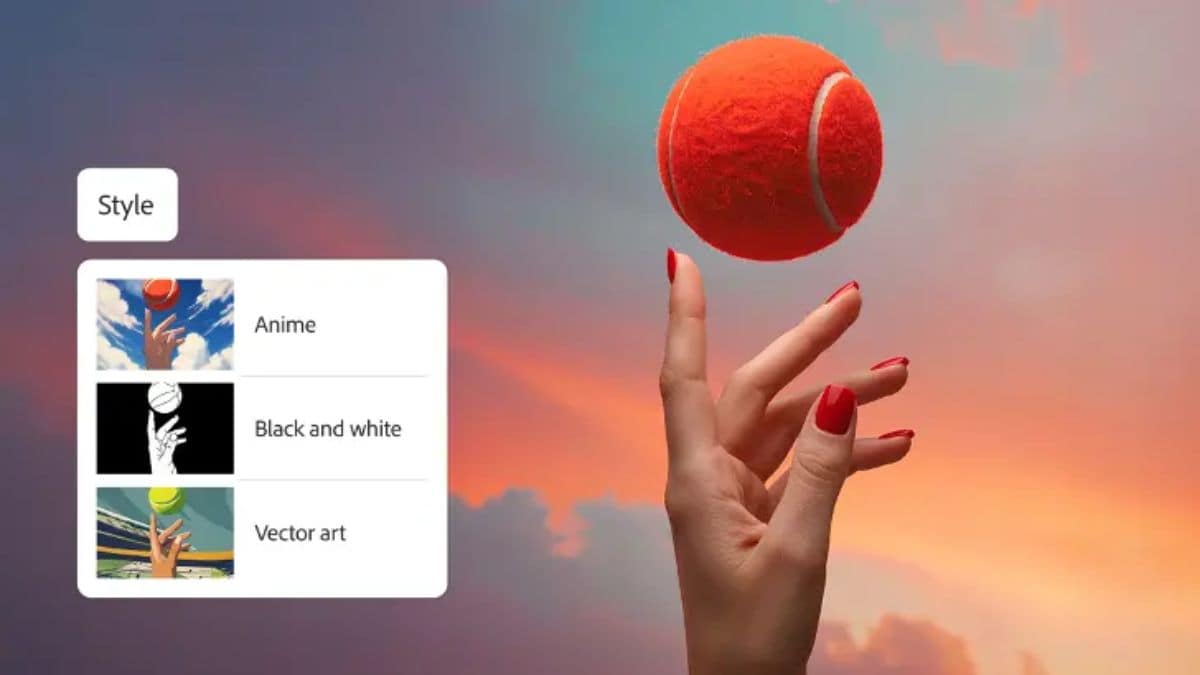

Another new addition is the Style Preset tool. Users generating AI videos can now choose a style, such as claymation, anime, line art, or 2D, along with their prompt, and Firefly will adhere to the chosen style in the final output. Keyframe cropping is also now possible at the prompting stage: users can upload the first and last frames of a video, and Firefly will generate a video that matches their format and aspect ratio.

Apart from this, Adobe is introducing a new tool, dubbed Generate Sound Effects, in beta. The tool allows users to create custom audio using a voice or text prompt and layer it onto an AI-generated video. When using their voice, users can also dictate the timing and intensity of the sound, since Firefly generates audio that matches the energy and rhythm of their voice.

Finally, the company is introducing a Text to Avatar feature that converts scripts into avatar-led videos. Users will be able to select their preferred avatar from Adobe’s preset library, customise the background, and even choose the accent of the generated speech.